Sourcegraph Amp in 5 minutes - The Good, the Bad and the Ugly

Is Amp, the new AI coding agent ready for Enterprise?

Sourcegraph has launched an exciting new product called Amp, currently in its Research Review phase. With Sourcegraph’s tagline on the website “Engineered for the Enterprise,” I was eager to try it and see if it could live up to that promise, especially in the world of Corporate Finance.

It’s early days, but Amp already shows real promise. It offers some unique and well-considered features that could genuinely set it apart from other AI coding assistants as it matures.

🌱

Ok, let’s walk through it.

Note: Before we dive in, it's worth noting: this version of Amp is Research Preview, and some rough edges are expected. I'm not focusing on missing functionality or bugs - my aim here is to evaluate the overall direction.

1. The Good Parts 💚

First Impressions: Setup Is Seamless

💚 Installation

Amp installation lives up to its promise - it is friendly and straightforward. It ships as an extension so you can keep your existing VS Code setup and enhance it with the Amp Panel. It works with the canary version VSCode (Insiders), also with all forks (Cursor, Windsurf, VSCodium, …etc.)

💚 CLI support

Amp is also available as a CLI tool, so you can use the full AI Coding Agent functionality, directly from the terminal!

💚 DevContainer Friendly

Amp integrates smoothly with devcontainers. Just add the extension and you're set:

"customizations": {

"vscode": {

"extensions": ["sourcegraph.amp"]

}

}💚 Quick Start

In under 5 minutes, I had Amp chat and CLI working in a devcontainer!

Great first impression!

Ok, let’s see the features!

Generous Context Window

💚 200K Tokens!

According to the Amp Owner’s Manual, Amp uses a fixed context window of 200K tokens and tries to “max out” this window to give the AI as much information as possible.

This is significantly larger than Cursor’s 128K limit for Claude 3.7 Sonnet, Gemini 2.5, GPT 4.1 or O4-mini.

As you work with Amp, each thread accumulates context. Amp provides 2 convenience methods to manage large contexts:

💚 Compact Thread - summarise the existing conversation to reduce token usage while preserving context

💚 New Thread with Summary - start fresh, beginning with a summary of the current conversation

MCP Support

💚 MCP Configuration

Amp offers a dedicated MCP configuration panel, accessible directly from the chat UI. Setup is smooth and intuitive.

💚 Built-in MCP Servers

Amp comes pre-bundled with a set of useful MCP servers to make development more convenient. For example, it can create mermaid charts directly in the chat panel to give you visual representation of any task you are working on.

Allowlists

💚 Command Allowlisting

Amp includes a great security feature: command allowlisting. You can define exactly which CLI commands the AI is permitted to run inside your project.

Allowlisted commands are stored in your repo as part of the project settings.

For enterprise use, this is a great foundation and I hope to see more fine grained tooling around permission management and regex pattern support.

Ok, now let’s look at the less desirable parts:

2. The Bad and the Ugly

As I mentioned earlier, Amp is still in an early development phase, so some rough edges and teething issues are to be expected.

Technology, especially in the AI space is moving extremely fast. Amp is goes going to get better and better and the Sourcegraph Team will rapidly resolve all teething issues.

When I wrote this article a month ago, my aim was to point out some of the areas that sparked debates in the engineering community. Of course, in engineering, every feature decision reflects a thoughtful tradeoff, and every limitation is the result of optimising something else.

Ultimately:

All Apps have limited number of existing features

and unlimited number of non-existing ones.

With that in mind, simply listing caveats or focusing on what’s missing wouldn’t do Amp justice - and it would ignore the thoughtful engineering behind many of these choices.

Instead, I want to highlight a couple of key areas that have sparked discussion, and explore both their advantages and current limitations.

Context Management

Context management is one of the hardest problems in AI coding assistants. Too little context, and the LLM lacks the necessary information to generate relevant and good quality code. Too much content, and you risk inefficient token usage and bloated context window with irrelevant data.

The Sourcegraph team is doing incredible work in this space:

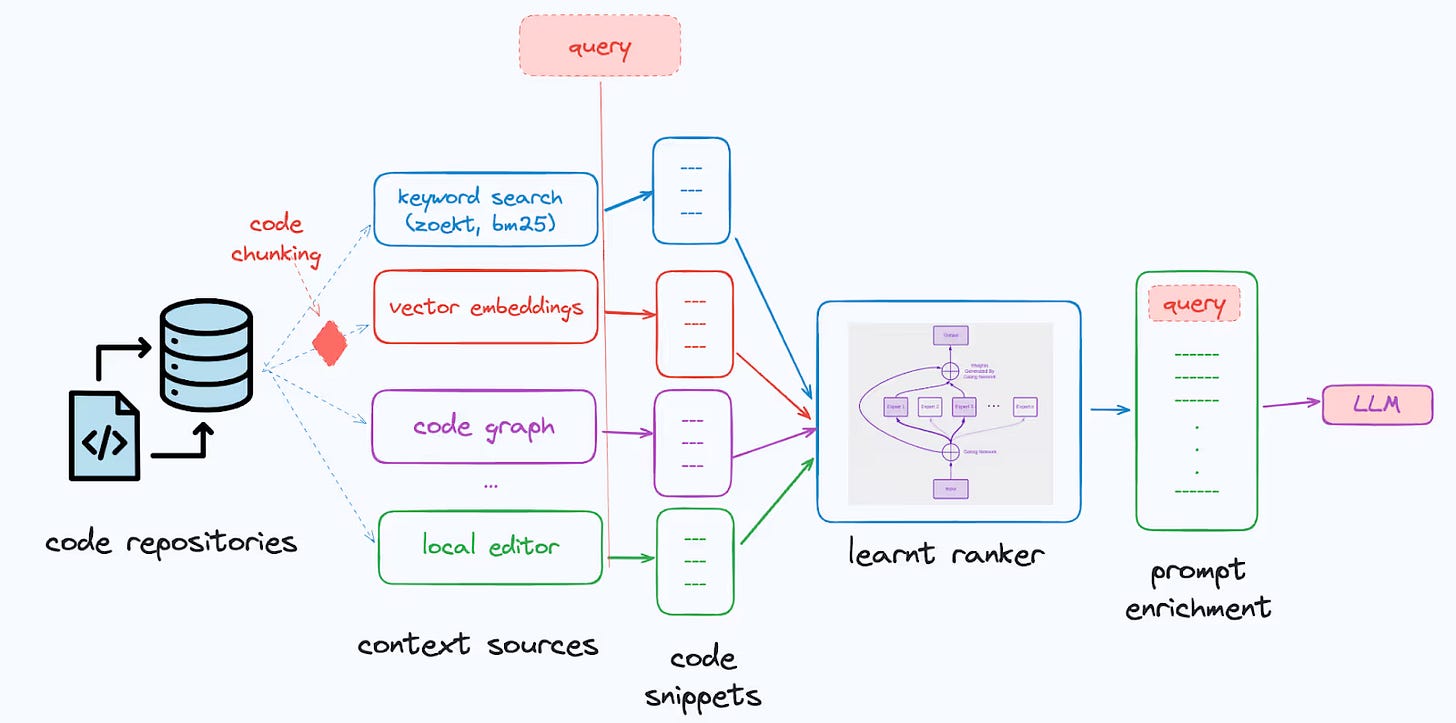

Sourcegraph’s blog post “Lessons from Building AI Coding Assistants: Context Retrieval and Evaluation” explains into how crucial context retrieval is when building an AI-powered coding assistant. It explains that simply feeding an LLM raw code isn’t enough - instead, you need a context engine that can intelligently retrieve and rank relevant snippets from your codebase (using methods like keyword and embedding search) while staying within latency and token constraints .

The post also highlights how evaluating these systems is tricky: you need to measure both the retrieval accuracy and the final quality of AI responses, even though getting ground-truth context is expensive. In short, the piece argues that robust search pipelines and careful evaluation frameworks are essential to turn LLMs into genuinely useful developer tools.

Resources:

Model Selection

Choosing the right model is a critical part of any AI workflow, especially in enterprise settings where auditability, compliance, and control are key.

As of May 2025, Amp is focused exclusively on Claude 3.7 Sonnet. It does not yet support custom model selection, bring-your-own-key setups, or private deployments.

This is a deliberate design choice. As the Amp documentation explains:

“We believe that building deeply into the model’s capabilities yields the best product, vs. building for the lowest common denominator across many models.”

There’s real merit in that approach. A tightly integrated model can unlock deep functionality, more consistent results, and better long-term optimisation.

That said, for many enterprise users, model flexibility is a core requirement. In some Financial orgs, only pre-approved and privately hosted models that already passed their compliance criteria can be used.

Competing tools like Cursor already support OpenRouter, direct Anthropic and OpenAI keys, and private models on hosted on Azure or AWS Bedrock.

I’m hopeful that as Amp matures, expanded model support will become part of its roadmap.

MCP Configuration

Amp includes a dedicated interface for setting up MCP tools, and the GUI makes initial setup quick and accessible.

One current limitation is that Amp does not yet support a project-specific mcp.json configuration file. All MCP settings are managed globally through the GUI, which means they can’t be versioned or tracked alongside your codebase.

This introduces a bit of “hidden state” - something many teams prefer to avoid in favour of transparent, file-based configuration.

That said, the existing interface is clean and user-friendly, and it’s easy to imagine mcp.json support arriving as the team expands project-scoped features. Adding this would align nicely with enterprise workflows and make the setup more reproducible across teams.

Thread Management

Amp automatically stores all Agent chat threads on Sourcegraph’s servers, making them accessible via your account at ampcode.com/threads. This obviously raises a number of concerns.

A local-first alternative would be storing threads inside the project directory (e.g. .threads). It would also allow teams to version, discard, gitignore or audit conversations as part of the normal workflow.

There are pros and cons for both.

Offering flexibility here would be a meaningful step forward, especially for teams in regulated or high-trust environments.

Leaderboards

Amp promotes “thread sharing” and “leaderboards.”

Although I can see the positive social element, it also feels completely out of place in professional settings.

In the age of Core4, DORA, SPACE, and DevEx, we’ve moved far beyond vanity metrics. Measuring productivity or encouraging competition based on lines of code or number of AI prompts risks incentivising the wrong behaviours - and misrepresents what good engineering looks like.

Training on Users’ Data - fixed! ✅

According to the Amp manual:

“Sourcegraph may use your data to fine-tune the model you are accessing unless you disable this feature.”

Update: This is now fixed and opt-out by default.

I’m very grateful for the Sourcegraph team to change this so fast.

The Verdict?

Amp is already a powerful AI coding assistant and is perfectly suitable for most projects. ❤️

With improvements around model support, better privacy and security controls, and a shift away from sending threads to sourcegraph servers, Amp could become a powerful player for enterprise level development, too.

Keep going, Sourcegraph - excited to see how Amp evolves from here! ✨

That’s it for now — thanks so much for reading! 💙